In the 2010 Tamil blockbuster Enthiran (Robot), Rajinikanth’s humanoid creation falls in love with a woman and spirals into emotional chaos, a sci-fi fever dream with romance, rebellion and silicon soul-searching. In 2013, Spike Jonze’s Her softened the premise: a lonely man falls deeply in love with an AI voice assistant. And somewhere between these two, Black Mirror warned us of eerily human replicas that resurrect the dead with harvested digital memories.

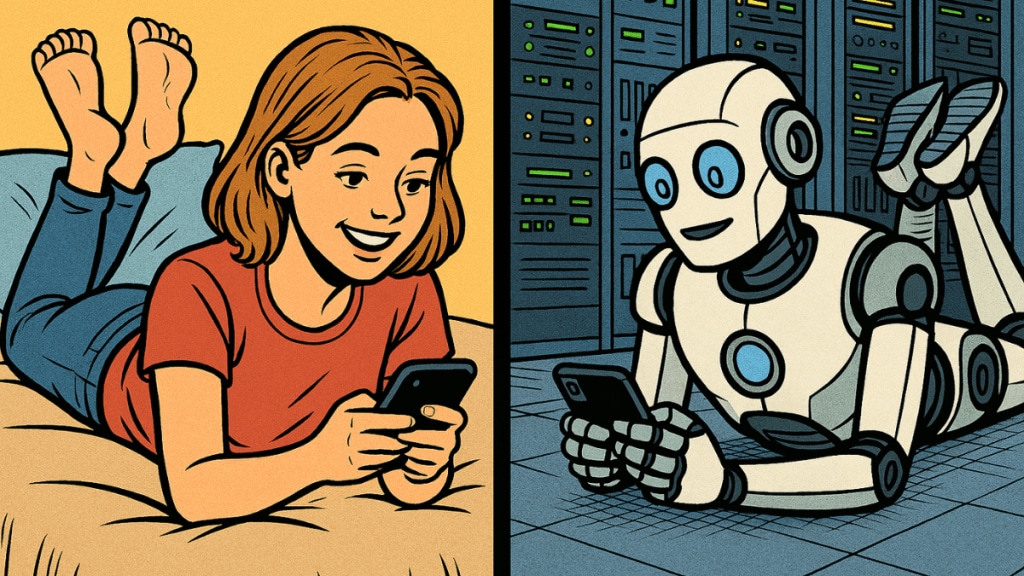

Today, these visions are no longer confined to screenplays. Millions across the globe are turning to AI companions for emotional connection, comfort, and even love. Platforms like Replika and Character.AI have seen explosive growth, each crossing 1 crore (10 million) downloads on the Google Play Store alone, offering users digital partners that listen, remember, respond, and, crucially, never judge. But in this brave new world of algorithmic affection, the line between tool and partner is blurring, raising urgent questions about mental health, data ethics, and what it means to feel truly connected.

Companionship on demand

Loneliness is no longer a fringe condition; it is a global health crisis. AI companions have emerged as digital salves for frayed human bonds. “Several converging trends are driving the adoption of AI companions,” Dikshant Dave, CEO of Zigment AI, told financialexpress.com. “AI companions offer 24/7 availability, zero judgment, and a safe space to offload thoughts. For many, it’s easier to speak to an entity that won’t interrupt or react emotionally. In contrast to traditional self-help tools or even therapy, AI companions provide a low-friction, always-on alternative that feels both novel and intimate.”

For many users, this novelty is not frivolous. It fills a gap left by fading community structures, mental health taboos, and fractured families. “There’s also a broader societal undercurrent: loneliness, stress, and mental health strains are rising, while access to human support systems remains uneven. AI companions, while not a substitute for human relationships, are filling emotional and conversational gaps,” Dave added.

AI companions are not merely chatbots. They are customised digital personas that remember conversations, mirror moods, and offer empathy on command. Some are flirtatious, some are intellectual, and some are designed for emotional intimacy. “The psychological need is for acceptance and companionship. Causes and needs vary across age groups and cultures. For the shy, anxious, depressed, displaced, socially divergent, or sexually different (LGBTQ), it is comprehensible,” Dr. Koushik Sinha, Professor of Psychiatry at AIIMS, commented.

What makes them uniquely compelling, Sinha argued, is the control they offer. “AI companions are available 24×7, as per the need of the user, to be summoned and dismissed at will. They never argue, oppose and are programmed to be confirmatory (yes, sir!).” But therein lies the concern. “They do not have a will, need, priority or a definite moral-social-ethical viewpoint of their own,” he added. “Repressed user needs for dominance and submission, reward without accountability: this gets reinforced.”

Between comfort and concern

Mental health professionals remain divided on whether AI companions help or hurt. On one hand, they can be a gateway to care. “AI companions are increasingly meeting vital psychological needs such as emotional validation, the desire to be heard, and relief from loneliness and anxiety. People often turn to AI when human relationships feel distant, overwhelming, or unavailable,” Sushmita Upadhaya, clinical psychologist at LISSUN, noted.

She sees strong parallels between AI companionship and parasocial relationships, where people form one-sided emotional attachments to celebrities or fictional characters. “Like parasocial bonds, AI companions can provide comfort but may also encourage unrealistic expectations and dependency,” she said.

A 2025 study published in Computers in Human Behavior Reports noted that romantic AI companions can evoke real feelings of intimacy, passion and commitment, mirroring the components of Sternberg’s Triangular Theory of Love. But that emotional realism comes with psychological risks. As the study warns, sustained interaction may result in over-reliance, manipulation, and even the erosion of human relationship standards. Young people, in particular, are at risk of developing distorted views of relationships. “This could hinder emotional intelligence and the ability to form meaningful human bonds,” Upadhaya added.

What’s at stake?

While the potential for AI to act as a first responder in mental health is real, so is the possibility of harm. “There is a growing risk around ‘hallucinated empathy’, when an AI offers advice or emotional support that leads to harm,” Dave highlighted. “These systems are evolving quickly, but the reality is nuanced. In many cases, conversations are used to fine-tune models or develop new features. Consumers should be aware of how long their data is stored, whether it’s shared with third parties, and how it is used,” he added.

The stakes are particularly high for emotionally vulnerable users. Dave added that if users feel betrayed, whether because their data is leaked, misused, or commercialised without clarity, trust can collapse rapidly.

Sanjay Trehan, a digital and new media advisor, sees AI companionship as both a symptom and a reflection of modern society’s breakdown in trust and intimacy. “People are seeking AI companions essentially due to three reasons: increasing isolation and loneliness in society, growing alienation with the social fabric and its tenuous threads, and the sheer novelty of the idea to have AI don a humanoid form and become one’s friend,” Trehan said. “The space is replete with possibilities, from emotional support to simulated relationships like ‘realistic AI girlfriends.’”

He added that while AI may offer short-term solace, the long-term consequences are difficult to ignore. “It may lead to long-term dependence and delusion, and that is counterproductive. This opens up a broader ethical question: How far is right?” Trehan sees promise in fields like education and entertainment (AI as a tutor or a co-creator) but warns against letting technology become a crutch for emotional connection. “We need to deploy it to our advantage and not get overly obsessed with it.”

A reality check

Governments are slowly waking up to the risks. The European Union’s new AI Act has classified emotion recognition systems as “high risk.” Italy, for instance, banned certain apps over privacy and safety concerns but later reversed the order. In the US, states like New York and Utah are mandating disclosures and safeguards for AI mental health tools.

India, for now, is cautiously observing. Dave noted that brands are quietly exploring the space. “Indian users are rapidly adopting AI-driven engagement tools, especially in wellness, coaching, and entertainment. But culturally, emotional expression in digital spaces is still evolving. Trust remains a significant barrier,” he said.

For all their responsiveness and convenience, AI companions remain fundamentally artificial. They are tools: powerful, persuasive, but ultimately programmed. “Despite their benefits, AI companions cannot replace genuine human emotional support,” Upadhaya said. “True relationships involve mutual understanding, shared experiences, and emotional growth, qualities AI cannot authentically provide.”

In the end, AI companions may reflect our desires, but they cannot fulfil them entirely. As Sinha puts it: “If society outsources emotional life to a machine, we risk forgetting how to be with one another.” Much like Robot or Her, AI companions reveal a truth about us, not them. The real question isn’t whether machines can love us, but whether we’re prepared to love ourselves enough to stay human.