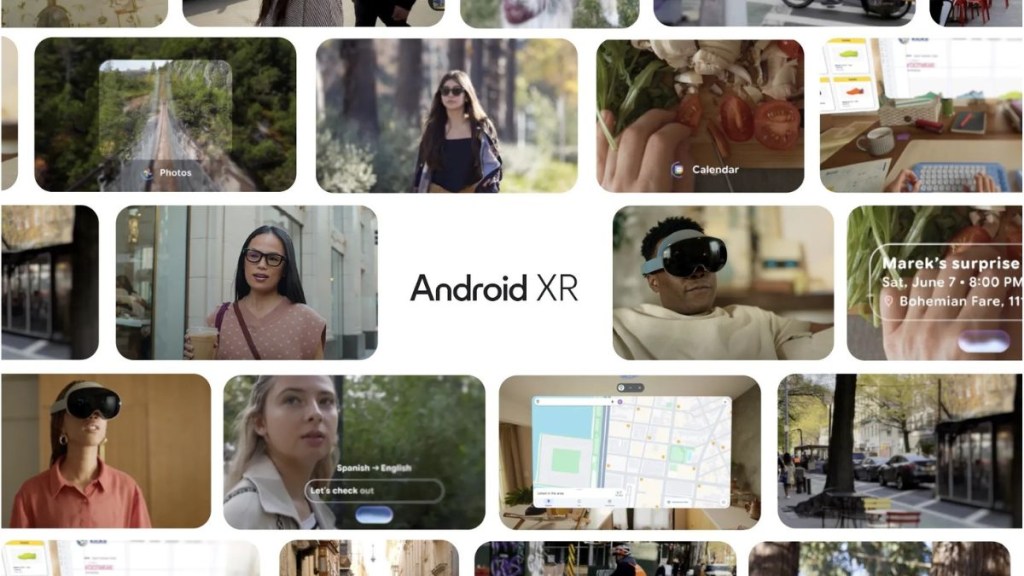

At its annual Google I/O Developers Conference in California, Google unveiled its Android XR Glasses. Created through a strengthened collaboration with Samsung, the AI-driven glasses integrate Gemini technology into wearable eyewear. Google is also teaming up with fashion-forward eyewear companies such as Gentle Monster and Warby Parker to craft the latest generation of smart glasses.

At #GoogleIO, we shared how decades of AI research have now become reality.

From a total reimagining of Search to Agent Mode, Veo 3 and more, Gemini season will be the most exciting era of AI yet.

Some highlights 🧵 pic.twitter.com/2n9rbGNj0Q

— Sundar Pichai (@sundarpichai) May 20, 2025

Google has also officially begun rolling out its highly anticipated Gemini Live feature on both Android and iOS, adding real-time visual recognition to its AI assistant. The feature is accessible through the Gemini app.

Google Unveils Android XR Smart Glasses Powered by Gemini at I/O 2025:

At Google I/O 2025, the tech giant revealed its vision for the future of wearable technology with the introduction of Android XR smart glasses powered by its Gemini AI. In partnership with Samsung, Gentle Monster, and Warby Parker, Google is working on sleek, AI-integrated eyewear. The company also expressed interest in expanding its collaborations, with Kering Eyewear among potential future partners to broaden the variety of available styles.

These innovative glasses are equipped with built-in cameras, microphones, and speakers, functioning in tandem with a connected smartphone to deliver hands-free assistance. A subtle in-lens display is available as an optional feature, providing real-time information exactly when needed. With Gemini’s integration, the glasses can interpret the user’s surroundings through visual and audio cues, enabling contextual responses and personalized memory assistance throughout the day.

Gemini Live Brings Real-Time Visual Understanding to Google Assistant:

Gemini Live introduces a new capability that lets Google’s assistant instantly analyze and react to visual data captured through a smartphone camera. During live demonstrations, Google showed the assistant interpreting environments on the fly, spotting errors and correcting intentionally deceptive information as users navigated outdoor settings.

Gemini 2.5 Pro is taking off 🚀🚀🚀

The team is sprinting, TPUs are running hot, and we want to get our most intelligent model into more people’s hands asap.

Which is why we decided to roll out Gemini 2.5 Pro (experimental) to all Gemini users, beginning today.

Try it at no… https://t.co/eqCJwwVhXJ

— Google Gemini App (@GeminiApp) March 29, 2025

Google has shared its ambition to evolve Gemini into the world’s most intelligent, personalized, and proactive AI assistant. The company is also rolling out advanced features that enable Gemini to process a combination of visual, auditory, and contextual inputs seamlessly across various apps and devices.