Tech giants Amazon and Microsoft have announced a combined $52.5bn (₹4.7 lakh crore) investment plan for India.

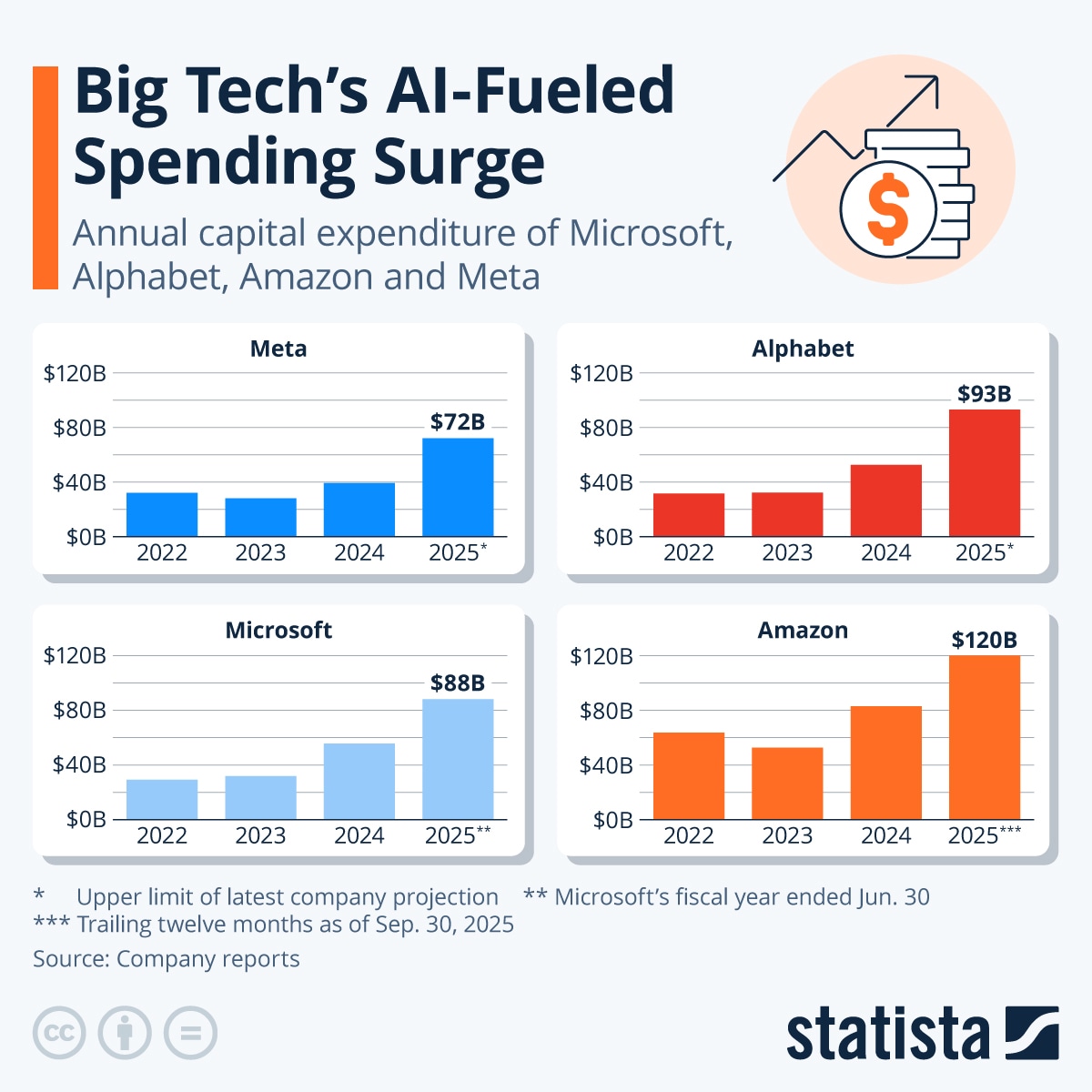

Big as that sounds, it is just a sliver of what the world is now pouring into AI infrastructure. Across the US, Europe, the Gulf and China, the major technology companies and AI labs, including Microsoft, Amazon, Google, Meta, OpenAI, Nvidia and Oracle, along with GPU cloud upstarts, are driving a capex wave expected to cross $400 billion in 2025.

To make that more relatable: $400 billion is more than the annual revenues of Reliance, TCS, Infosys, HDFC Bank and ICICI Bank combined. It is also roughly equal to the GDP of Denmark. For an industry that once scaled with code and minimal physical assets, this shift towards concrete, chips, land and power is dramatic.

And if that isn’t mind-boggling enough, consider this: OpenAI alone expects to spend $1.4 trillion over the next few years to build out the infrastructure it needs to deliver on its AI ambitions.

So the real question is: what exactly are these companies seeing that justifies this level of spending? Is this the logical early build-out of a new computing era, or a trillion-dollar bet made ahead of actual demand?

The $25,000 Chip Reality: Why Costs Are Exploding

The easiest way to understand this spending boom is this: AI needs a lot of computers, and the world simply does not have enough of them right now.

Every time you use an AI chatbot or an AI tool at work, large clusters of powerful chips are working in the background. Training a single advanced AI model today can cost tens of millions of dollars, and running it every day for millions of users costs even more.

This is why companies are buying chips at an unbelievable scale.

Nvidia’s AI chips cost around $25,000 each, and tech giants are buying hundreds of thousands of them. On top of that, building one modern data centre (the place where all these chips sit) costs $1–2 billion, and you need dozens of these centres to run AI globally. So, very quickly, the math adds up to hundreds of billions of dollars.
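To see how quickly that math compounds, here is a rough back-of-the-envelope sketch. The ~$25,000 chip price and the $1–2 billion data-centre range come from the text; the chip count (400,000), the number of data centres (36), the $1.5 billion midpoint and the number of big spenders (five) are hypothetical stand-ins for “hundreds of thousands”, “dozens” and “tech giants”, not reported figures.

```python
# Back-of-the-envelope AI capex arithmetic.
# Chip price and the $1–2bn data-centre range are from the article;
# the counts and the $1.5bn midpoint are hypothetical illustrations.
CHIP_PRICE_USD = 25_000          # "around $25,000" per Nvidia AI chip
CHIPS_PER_COMPANY = 400_000      # hypothetical stand-in for "hundreds of thousands"
DATA_CENTRE_COST_USD = 1.5e9     # hypothetical midpoint of "$1–2 billion"
DATA_CENTRES_PER_COMPANY = 36    # hypothetical stand-in for "dozens"
BIG_SPENDERS = 5                 # hypothetical number of companies spending at this scale


def capex_billions(chip_price, chips, dc_cost, dcs):
    """Return (chip spend, data-centre spend, total) in billions of US dollars."""
    chip_spend = chip_price * chips / 1e9
    dc_spend = dc_cost * dcs / 1e9
    return chip_spend, dc_spend, chip_spend + dc_spend


chips, centres, total = capex_billions(
    CHIP_PRICE_USD, CHIPS_PER_COMPANY, DATA_CENTRE_COST_USD, DATA_CENTRES_PER_COMPANY
)
print(f"Per company: chips ${chips:.0f}bn + data centres ${centres:.0f}bn = ${total:.0f}bn")
print(f"Across {BIG_SPENDERS} companies: ${total * BIG_SPENDERS:.0f}bn")
```

With these made-up inputs a single company lands in the tens of billions, and a handful of companies together reach the hundreds of billions the article describes. The sketch treats chips and buildings as separate line items, as the text’s “on top of that” implies; real budgets may split costs differently.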

But there is another reason for this rush: nobody wants to be left behind.

If Microsoft builds more AI capacity, Google cannot afford to slow down. If Amazon expands AWS, Meta has to follow. OpenAI, even though it does not run its own data centres, is signing multi-year cloud deals worth tens of billions of dollars to make sure it always has enough compute power.

Even smaller GPU cloud firms like CoreWeave have suddenly raised billions because everyone wants more AI compute than the market can supply.

In simple terms, whoever has more computing power will be able to build better AI. At least, that is what the theory suggested until the Chinese AI model DeepSeek came along. DeepSeek showed that far fewer, older-generation chips could deliver a model comparable to the best in the world. That one fact shook markets across the world, albeit briefly.

It appears companies have gone back to the “bigger is better” theory. The belief is that whoever builds better AI will control the next big shift in technology. That is why the spending looks like a race: it is one.

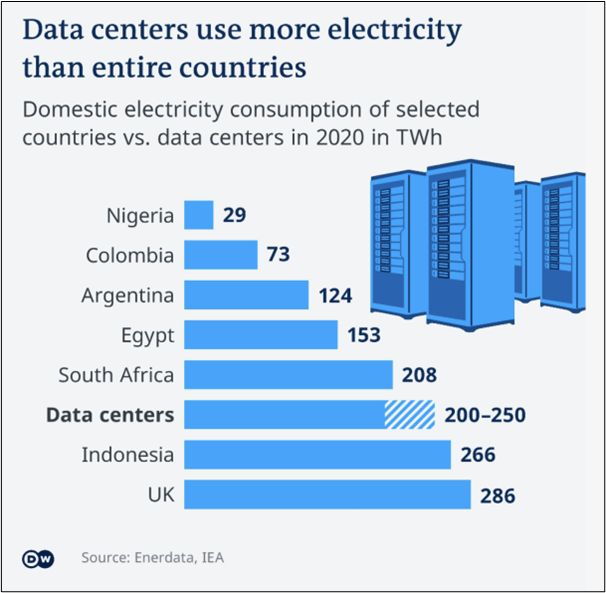

There is also a much bigger economic story behind it. In the US, the boom in data-centre construction has pushed up GDP growth. Countries in the Middle East are putting sovereign money into AI infrastructure. China plans to build over 250 AI-focused data centres in the next two years. So this is no longer just a “Big Tech thing”; the entire world is preparing for an AI-heavy future.

But here is where the story gets tricky: the money AI brings in today is nowhere close to the money being spent. Revenue from AI software and services is perhaps $50–60 billion today, while infrastructure spending is close to $400 billion. That is a big gap.

So why spend so much now? Because these companies believe AI will soon be used everywhere: in search, offices, customer service, healthcare, finance, transportation and daily life. If that happens, this investment will look smart. If it does not, some of this infrastructure may sit underused.

In short, tech companies are building for the future, not for today. And the world will soon find out whether that future is as big as they expect.

The Timing Trap: Building Capacity or Burning Cash?

The debate around this AI infrastructure boom really comes down to timing. On paper, the spending looks early. The money AI brings in today is still small, perhaps $50–60 billion across the biggest companies (excluding hardware makers like Nvidia), while global capex on AI infrastructure is roughly seven to eight times higher.
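The size of that mismatch is easy to check. The sketch below uses only the figures quoted in the article (roughly $400 billion of capex against $50–60 billion of AI revenue) and computes both the dollar gap and the multiple; at those figures, capex comes out at about 6.7 to 8 times revenue.

```python
# Capex-to-revenue gap, using only the figures quoted in the article.
CAPEX_BN = 400                 # global AI infrastructure capex, ~$400bn
REVENUE_RANGE_BN = (50, 60)    # AI software/services revenue, $50–60bn


def capex_multiple(capex_bn, revenue_bn):
    """How many times larger capex is than revenue."""
    if revenue_bn <= 0:
        raise ValueError("revenue must be positive")
    return capex_bn / revenue_bn


for revenue in REVENUE_RANGE_BN:
    gap = CAPEX_BN - revenue
    multiple = capex_multiple(CAPEX_BN, revenue)
    print(f"Revenue ${revenue}bn: gap ${gap}bn, capex is {multiple:.1f}x revenue")
```

The lower the revenue estimate, the wider the multiple, so even small revisions to revenue figures noticeably change how early the spending looks.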

That mismatch makes many wonder whether the world is building capacity long before real demand arrives. It feels a bit like the telecom boom around 2000, when companies laid thousands of kilometres of fibre only to watch usage grow much more slowly than expected.

But the counterargument is equally strong.

AI is a compute-first technology, and the industry has hit a point where you cannot launch the next wave of AI products unless the infrastructure exists beforehand. Every new model is significantly larger, every enterprise customer wants more AI features, and every consumer app seems to be adding AI in some form. If companies wait for demand to show up clearly, they will fall behind competitors who built early.

This fear of being late, especially after watching how cloud, mobile and social media scaled in previous cycles, is driving much of the urgency.

There is also a practical layer to this. GPUs are scarce. Power is scarce. Land and data-centre capacity are scarce. If Microsoft or Amazon does not secure them now, they simply may not be available later. Even OpenAI, which does not own data centres, has locked itself into multi-billion-dollar cloud contracts because it cannot afford to run out of compute. In that sense, spending is not only about optimism but also about protecting future access.

So the timing question has no clean answer. It is early if you look at today’s revenues, late if you look at the pace of AI adoption, and inevitable if you believe AI will become foundational. Big Tech is choosing to bet on that third view and is building the world that view requires.

The Energy Ceiling: When Power Becomes Scarce

The biggest risk is that the money arrives slower than the infrastructure.

Tech and AI companies may spend $400 billion on AI infrastructure this year, while AI-related revenue today is much lower. If adoption does not scale fast enough, a lot of this new capacity could sit underused, while still consuming huge amounts of power and requiring expensive hardware refreshes. That becomes a drag on profits.
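A minimal sketch of why underused capacity drags on profits: it estimates how long a single chip takes to earn back its purchase price from rental income at different utilisation levels. The ~$25,000 chip price is the figure quoted earlier; the $2-per-hour rental rate is purely hypothetical, and power, cooling, staff and financing costs are ignored, so real payback periods would be longer.

```python
# Payback period for one AI chip at different utilisation levels.
# RENTAL_RATE_USD_PER_HOUR is a hypothetical illustration, not a quoted price.
HOURS_PER_YEAR = 24 * 365          # 8,760 hours
CHIP_COST_USD = 25_000             # per-chip price quoted in the article
RENTAL_RATE_USD_PER_HOUR = 2.0     # hypothetical rental price


def payback_years(chip_cost, hourly_rate, utilisation):
    """Years of rental revenue needed to recover the chip's purchase price.

    utilisation is the fraction of hours the chip is actually rented, in (0, 1].
    Operating costs (power, cooling, staff, financing) are deliberately ignored.
    """
    if not 0 < utilisation <= 1:
        raise ValueError("utilisation must be in (0, 1]")
    annual_revenue = hourly_rate * HOURS_PER_YEAR * utilisation
    return chip_cost / annual_revenue


for u in (0.9, 0.6, 0.3):
    years = payback_years(CHIP_COST_USD, RENTAL_RATE_USD_PER_HOUR, u)
    print(f"{u:.0%} utilised: payback in {years:.1f} years")
```

Because payback scales inversely with utilisation, cutting usage from 90% to 30% triples the time needed to recover the chip’s cost. Combined with the hardware refreshes mentioned above, a long payback period raises the risk that a chip is replaced before it has paid for itself.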

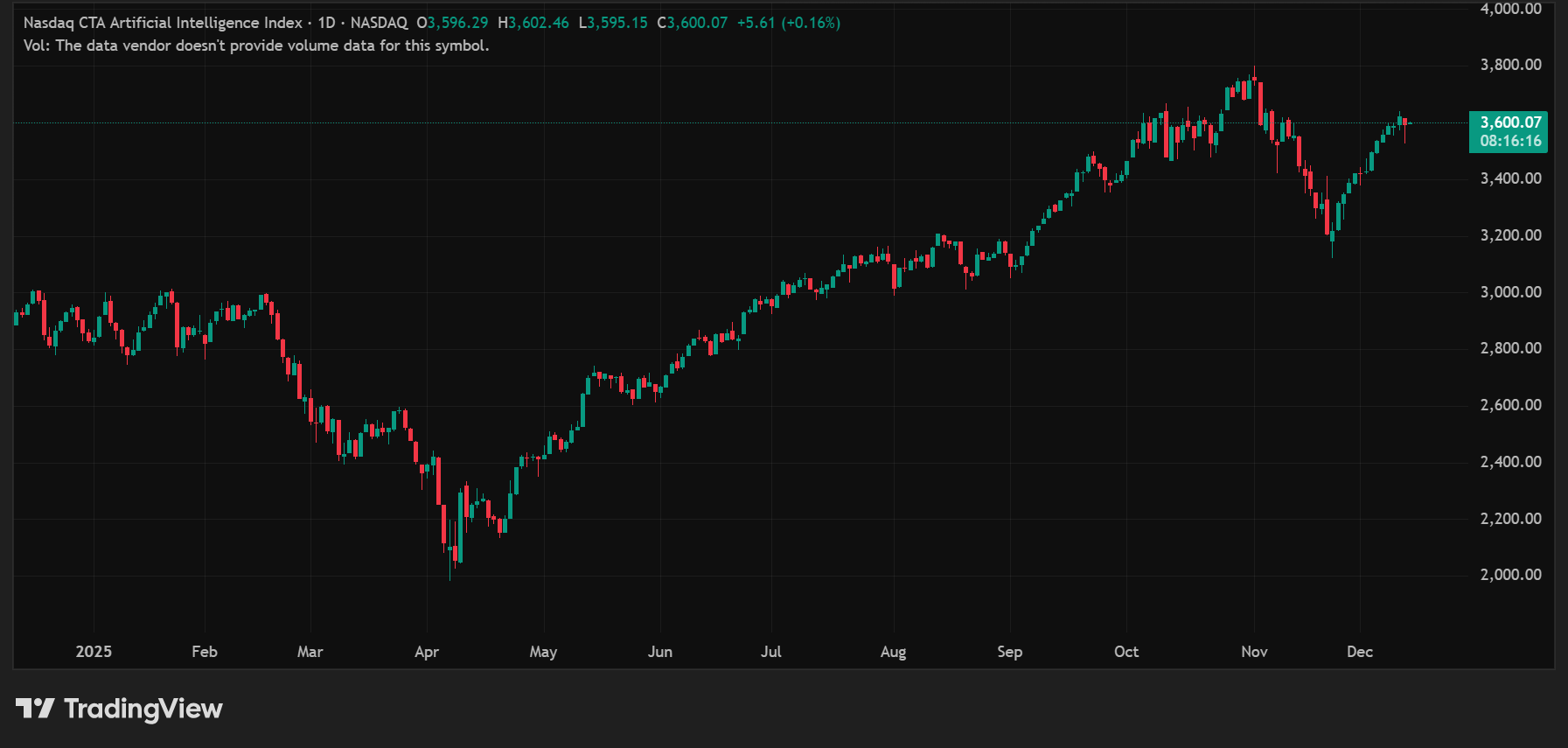

And if future profits were to disappoint expectations, valuations of AI darlings like Nvidia could take a big hit.

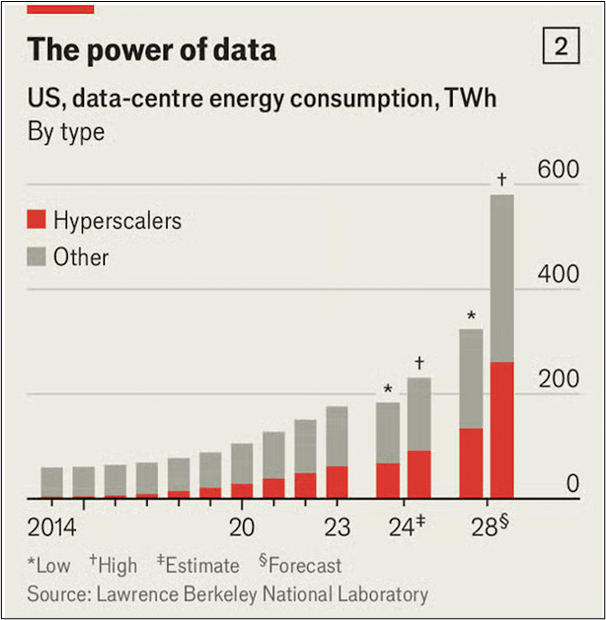

Then there is the energy and resource problem.

AI data centres use enormous amounts of electricity and water. Some forecasts suggest AI data centres alone could account for 4–6% of US power consumption by the early 2030s. If power prices rise or governments impose limits, the economics could weaken further. Regulation is another risk: if governments slow down AI deployment over concerns about misuse or safety, demand will not grow at the pace companies expect.
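To put the 4–6% forecast in physical terms, the sketch below converts a share of US electricity use into terawatt-hours per year and average continuous gigawatts. The 4,000 TWh baseline is an approximate, rounded assumption for annual US electricity consumption, held flat for simplicity; actual consumption in the 2030s will differ, so treat the outputs as orders of magnitude.

```python
# Converting a share of US power use into energy and average load.
# US_CONSUMPTION_TWH is an approximate, rounded assumption held flat over time.
US_CONSUMPTION_TWH = 4_000     # assumed annual US electricity consumption
HOURS_PER_YEAR = 24 * 365      # 8,760 hours


def share_to_energy(share, total_twh=US_CONSUMPTION_TWH):
    """Return (TWh per year, average GW) for a fractional share of consumption."""
    if not 0 <= share <= 1:
        raise ValueError("share must be between 0 and 1")
    twh = share * total_twh
    avg_gw = twh * 1_000 / HOURS_PER_YEAR   # 1 TWh = 1,000 GWh; GWh / hours = GW
    return twh, avg_gw


for share in (0.04, 0.06):
    twh, gw = share_to_energy(share)
    print(f"{share:.0%} of US power: ~{twh:.0f} TWh/year, ~{gw:.0f} GW on average")
```

On this baseline, the forecast range corresponds to roughly 160–240 TWh a year, or about 18–27 GW of round-the-clock demand.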

In a worst-case scenario, the whole AI infrastructure build-out binge could end badly for everyone, from the companies that make AI chips to the investors who bet on the AI theme.

But the opposite outcome is equally possible. Even modest productivity gains, say 5–10% across white-collar work, could add trillions of dollars to global output over a decade. In that world, today’s investments will look like basic digital infrastructure, much like fibre networks did once smartphones took off. Countries that attract these data centres and chip plants could see new jobs, higher tax revenues and innovation clusters forming around them.
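The “trillions” claim can be sanity-checked with a simple model. The sketch assumes, purely for illustration, that white-collar work produces $40 trillion of global output a year (a hypothetical round number, not a sourced estimate), holds that base flat, and phases the 5–10% gain in linearly over ten years, so only the final year captures the full gain.

```python
# Cumulative extra output from a productivity gain phased in over a decade.
# WHITE_COLLAR_OUTPUT_TN is a hypothetical round number, not a sourced estimate.
WHITE_COLLAR_OUTPUT_TN = 40.0   # hypothetical annual white-collar output, $ trillion
YEARS = 10


def cumulative_gain_tn(gain, base_tn=WHITE_COLLAR_OUTPUT_TN, years=YEARS):
    """Sum the extra output over `years`, with the gain ramping up linearly.

    Year 1 captures 1/years of the full gain; the final year captures all of it.
    The base is held flat, with no compounding or growth.
    """
    full_annual_gain = base_tn * gain
    return sum(full_annual_gain * year / years for year in range(1, years + 1))


for gain in (0.05, 0.10):
    print(f"{gain:.0%} gain: ~${cumulative_gain_tn(gain):.0f}tn extra output over {YEARS} years")
```

Even with the slow ramp-up, the totals come to roughly $11–22 trillion under these assumptions; a different base or adoption curve would move the numbers considerably.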

For companies, the upside is clear: owning AI compute becomes like owning toll roads, and everyone else will have to rent capacity. That is why Microsoft, Amazon, Google, Meta and OpenAI are willing to take a hit to free cash flow now: they want to control the foundation of the next big tech cycle.

A lot of this optimism is already built into the share prices of AI stocks. Here is a one-year chart of the NASDAQ CTA AI Index that clearly reflects it.

Betting on a Future That Isn’t Fully Here Yet

The world is essentially building the rails before the train arrives. It could turn out to be premature, or it could be the smartest bet of the decade.

If AI adoption accelerates, the companies building early will dominate. If not, this will go down as one of the most expensive misjudgements in tech.

Right now, Big Tech is choosing to believe that the upside is worth the risk, and global economies are being reshaped around that belief.

Author Note

Note: This article relies on data from fund reports, index history, and public disclosures. We have used our own assumptions for analysis and illustrations.

Parth Parikh has over a decade of experience in finance, research, and portfolio strategy. He currently leads Organic Growth and Content at Vested Finance, where he drives investor education, community building, and multi-channel content initiatives across global investing products such as US Stocks and ETFs, Global Funds, Private Markets, and Managed Portfolios.

Disclosure: The writer and his dependents do not hold the stocks discussed in this article.

The website managers, its employee(s), and contributors/writers/authors of articles have or may have an outstanding buy or sell position or holding in the securities, options on securities or other related investments of issuers and/or companies discussed therein. The content of the articles and the interpretation of data are solely the personal views of the contributors/writers/authors. Investors must make their own investment decisions based on their specific objectives, resources and only after consulting such independent advisors as may be necessary.