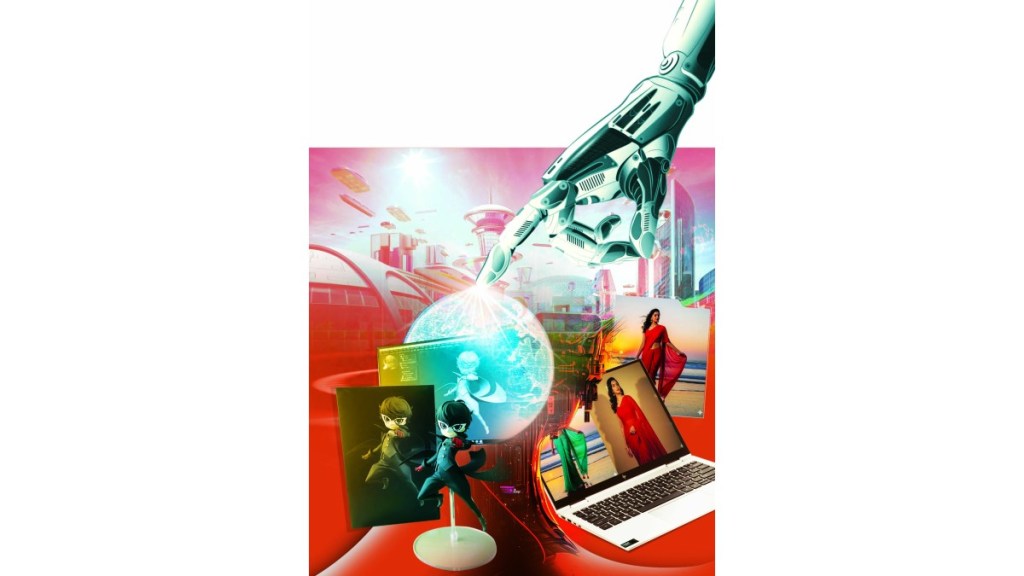

In a world where a simple online prompt can produce pictures, videos, audio and any other kind of generated content at the snap of a finger, the impact is typically twofold: a tidal wave of similar artificially generated content is created and shared across multiple platforms, and the risks of deepfakes and copyright claims rise to the forefront. Do new trends like Gemini’s 3D model trend, the AI retro saree trend and the couple trend from the Nano Banana 2.5 Flash Image generator also come with their own risks?

Nano Banana effect: India’s viral love for AI, and the privacy cost

India has reportedly recorded the highest number of Nano Banana users. Between January and August, the app saw an average of 1.9 million monthly downloads in the country, about 55% higher than in the US and accounting for 16.6% of global monthly downloads, TechCrunch reported in September. David Sharon, multimodal generation lead for Gemini Apps at Google DeepMind, confirmed this at a public event, saying that India has emerged as the No. 1 country in terms of Nano Banana usage as of now.

However, the number of complaints is also high. In a post on X, an IPS officer from Telangana warned of the pitfalls of uploading personal images to unknown websites: “Be cautious with trending topics on the internet!…Falling into the trap of the ‘Nano Banana’ trending craze… if you share personal information online, (such) scams are bound to happen.” He went on to add, “Never share photos or personal details with fake websites or unauthorised apps…These trends come and make a fuss for a few days before disappearing… Once your data goes to fake websites or unauthorised apps, retrieving it is difficult. …Remember, your data, your money — your responsibility.”

Nearly all of these customised AI content generation tools face a mix of backlash and acceptance. The image generation trends mentioned above have been widely adopted on Instagram and even Facebook, with people posting AI-generated images of themselves not only as 3D model action figures or clad in retro saree looks, but also in romantic settings with their favourite movie stars. Realistic pictures of regular social media users hugging or holding hands with celebrity heartthrobs are doing the rounds. You would struggle to tell an AI-generated image from a real Polaroid photo. One reason deepfake videos and images of celebrities are a dime a dozen is that so many clips featuring them already exist across the internet.

This makes it far easier for AI generators to create further content featuring their likeness, as there is more context and background material to draw from. Social media users put themselves at similar risk when they upload their personal images to different websites without adequate due diligence.

Beyond the image: How AI tools are eroding identity and mental health

The US is also currently seeing lawsuits against AI voice generators, several of which use celebrity voices to create AI bots or audio clips, once again raising concerns about identity fraud and copyright. An audio generator called Character.AI has become particularly popular in the US, with users building relationships and having legitimate personal interactions with chatbots that sound like their favourite celebrity crushes.

Pedro Pascal, Harry Styles and Ryan Gosling, among other stars, are all unwittingly playing the romantic interest in many women’s lives, keeping the spark alive through voice notes and text messages.

As reported in an article by The Cut, women make up over half of Character.AI’s 20 million users. Last year, Character.AI was sued by the parents of a teenager who died by suicide after having sexualised interactions with a chatbot of a Game of Thrones character.

Such image and video generators not only threaten privacy and credibility, they can also affect the self-esteem of the user.

The growing ‘retro saree trend’ has drawn some backlash on this front, with netizens saying that these images not only look undeniably fake, but also often look very little like the subject of the picture. With flowing hair, airbrushed skin and no wrinkles, the images present an unrealistic depiction of a woman in a saree, many have said.

ChatGPT, Google Gemini, Grok, Midjourney, Ideogram and Adobe Firefly, among others, are popular systems used for images; Character.AI, Lovo.AI, Speechify, Wellsaid, Hume, DupDub and ElevenLabs are touted as the best AI audio and voice generators out there as of now. Given the rate at which artificial intelligence content generators are being launched, and the countless purposes they are being used for, it is now harder to identify a fake than it is to identify an original piece of content.

AI-generated content is the internet’s shiny new toy that everyone wants to sample. Like all trends, perhaps this too shall pass. What remains to be seen is whether it will leave positive takeaways in its wake, or a trail of damage control.