Among the new tools Facebook is rolling out are AI-enabled tools for admins of chat groups. According to the company, the new tools will alert admins to messages and posts by members that contribute to conflict or lead to unhealthy conversations.

“Sometimes contentious conversations do come up, and so keeping groups safe is a priority for Facebook, as well as for admins who play an important role in maintaining safety and desired culture in communities. We’re testing and rolling out several new admin tools to support moderating conversations and managing conflict,” Facebook said in a blog post. According to the post, the company will add comment moderation to Admin Assist, which is powered by AI.

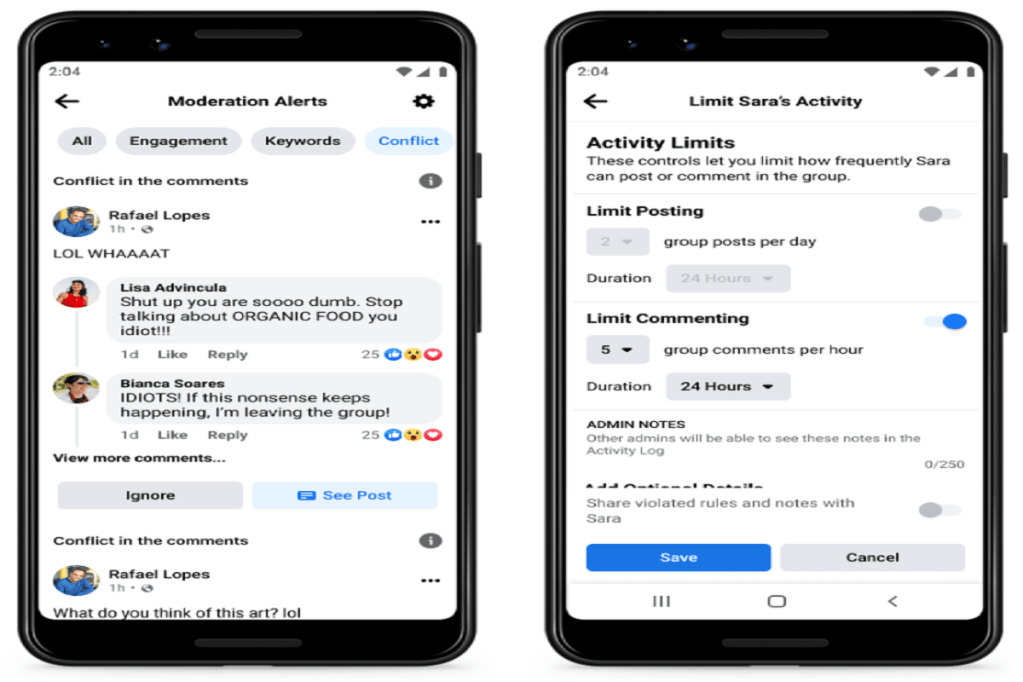

The tool is called Conflict Alerts. Because the feature is still in testing, Facebook has not said when it will be available. It appears similar to the existing Keyword Alerts feature, which notifies admins when commenters use certain words and phrases. The new feature uses machine learning models to spot subtler language that a fixed keyword list would miss. Once such language is identified, the admin is alerted and can act depending on the severity of the words or sentences. Possible actions include deleting comments, limiting how often individuals can comment, and removing users from a group, among others.

The company has not yet revealed many details about this feature. Separately, it has introduced other new functions, including an admin homepage. Acting as a dashboard, the homepage gives admins an overview of “posts, members and reported comments” along with new member summaries. It will also show a compilation of “each group member’s activity in the group, such as the number of times they have posted and commented, or when they’ve had posts removed or been muted in the group.”