Last month, Rashmika Mandanna, Katrina Kaif, Sara Tendulkar and Kajol were victims of deepfake-driven trickery on social media. The four celebrities had their likenesses lifted, fabricated and dumped on Facebook and YouTube. All of them have carefully cultivated brand images and multibagger endorsement deals and were caught unawares by their AI-generated fakes.

While the Ministry of Electronics and Information Technology (MeitY) is looking to introduce new regulations or alter existing laws to address the deepfake danger, marketers aver such manipulation of celebrity imagery, style and voice poses a serious threat to the whole domain of celebrity endorsement. Manisha Kapoor, SG & CEO, ASCI, says, “The use of deepfake technology to create deceptive advertisements featuring celebrities may associate the latter with products or messages they vehemently disagree with, leading to reputational harm.”

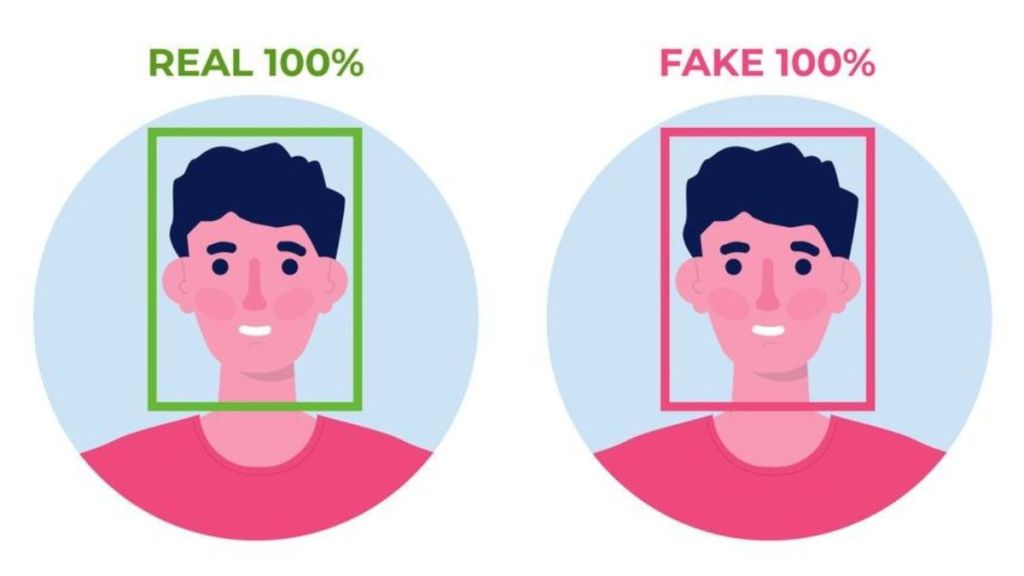

The problem is simple: apart from obscene morphed pictures, deepfakes are also being used to make false advertising claims. The technology is so good that it is hard to tell the real from the fake, and things will only get more difficult as it improves. Worse, with no deepfake detection or “management” tools to speak of as of now, it can take just minutes for a handful of black hats and bogus brands to siphon off the brand value of the likes of Sara-Deepika-Rashmika and disappear into the blue until it’s time to strike again.

The ASCI code prohibits any reference to or use of a celebrity, person of repute or institution without their express consent. The current redressal mechanism requires the concerned parties to file an FIR for action to be taken. The government has also directed social media firms to take down any deepfakes within 24 hours of notice. Identity protection sites can also help remove deepfake content online. That apart, tech and internet firms are developing solutions to make it easier to detect and remove deepfakes.

The elephant in the room is that deepfakes can break trust, which takes years to cultivate, in minutes. With trust being the foundation of brand-customer relationships, this is deeply worrying.

So how must brands and celebrities ringfence their equity against rogue elements before they can cause serious damage?

Action better than reaction

Says Naresh Gupta, co-founder, Bang in the Middle: “Celebs need to become far more proactive. They now need to employ teams that will, in real-time, monitor social feeds and proactively look for removing offending pieces.”

Actor Anil Kapoor sought legal recourse in response to the proliferation of distorted videos, GIFs and emojis bearing his likeness. He later secured a win in court against the unauthorised creation and use of AI imitations.

Till regulation is in place, Nisha Sampath, managing partner, Bright Angles Consulting, urges celebrities not to over-rely on digital media for image building and to also use offline media, which are less prone to fraud.

ASCI’s Kapoor adds that celebrities should integrate deepfake safeguards into communication management, using digital watermarking and legal strategies. Transparent PR campaigns reinforce authenticity, while tech-driven solutions enhance detection and prevention.

Creative folks also need to do what Anil Kapoor has done and create a legal firewall, Gupta adds. “They can’t trust the platform concerned to protect them. Things are a bit fluid right now and brands and their endorsers need to overreact to this,” he says.

In the end, it boils down to collective attention. “Robust regulation, heightened public awareness, and advanced technological solutions for detecting and mitigating deepfakes are crucial. This approach is key to safeguard the privacy and reputation of individuals, especially public figures, at a time where truth and deception coexist,” says ASCI’s Kapoor.