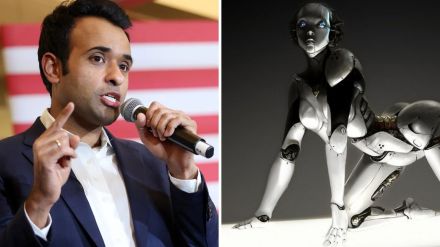

Former Republican presidential candidate Vivek Ramaswamy started an ‘AI vs Human’ debate on October 14 with a tweet cautioning against artificial intelligence that mimics human emotions too closely. He warned that some AI features, while technically advanced, could worsen addiction and loneliness rather than improve productivity or lifestyle.

Vivek Ramaswamy sounds alarm on AI’s ‘Over-Humanisation’

Ramaswamy on X wrote: “The unnecessary ‘over-humanisation’ of AI is becoming troubling. This new ‘feature’ will do nothing to improve productivity or prosperity.” He criticised the rapidly evolving ways AI is being designed to manipulate humans on deeply personal levels. “I don’t think government intervention will make it any better, but designing AI with the specific capability to sexually or emotionally manipulate humans warrants extreme caution,” he added.

Ramaswamy’s remarks were in response to a tweet by Lebanese-Australian entrepreneur Mario Nawfal, discussing OpenAI’s plans for a new feature called “Adult Mode.”

The unnecessary ‘over-humanization’ of AI is becoming troubling. This new “feature” will do nothing to improve productivity or prosperity. But it will almost certainly increase addiction & loneliness. I don’t think government intervention will make it any better, but designing AI… https://t.co/aw5Ycy2JOm

— Vivek Ramaswamy (@VivekGRamaswamy) October 15, 2025

Ramaswamy’s tweet started a debate on social media. Some users agreed that AI over-humanisation risks loneliness and emotional manipulation, while others argued adult users should have the freedom to explore AI features responsibly. One user wrote: “The closer machines mimic emotion, the more we risk forgetting our own. Progress should serve the soul, not replace it.” Another pointed to potential benefits, saying AI could act as a companion or even a supportive presence for mental health or relationship guidance.

ChatGPT’s ‘Adult Mode’

From December onwards, OpenAI will roll out a new feature allowing verified adult users to access more mature content on ChatGPT, including erotica. CEO Sam Altman explained this step as a way to “treat adults like adults.” The feature will allow the chatbot to be more expressive, flirtatious and personal if the user opts for it.

This move comes after years of restrictions designed to protect users dealing with mental health vulnerabilities. OpenAI says the platform now has tools to mitigate risks and can ease limitations for responsible users.

Earlier this year, OpenAI was sued by the parent of a 16-year-old who died by suicide. Critics are concerned that allowing adult content, even behind age gates, could increase risks for vulnerable individuals. “We don’t even know if their age gating is going to work,” said Jenny Kim, a lawyer involved in tech-related mental health lawsuits, according to the BBC. Meanwhile, survey data suggests one in five students reports some form of romantic interaction with AI.

Earlier, Elon Musk’s xAI launched a similar feature, introducing sexually explicit chatbots on its Grok platform. California Governor Gavin Newsom recently vetoed legislation that would have blocked AI companions for children unless the software could guarantee safety.